Agriculture, like many industries, is continuously evolving through technological innovation. One example is precision agriculture, a practice that uses data collection and analysis to optimize the use of inputs such as water, fertilizers, and pesticides according to local environmental conditions at the sub-field level. Artificial intelligence (AI) and the availability of low-cost sensors have renewed interest in precision agriculture and extended its application to the livestock sector. Falling costs have also made these tools more accessible to smallholder farmers in low- and middle-income countries (LMICs).

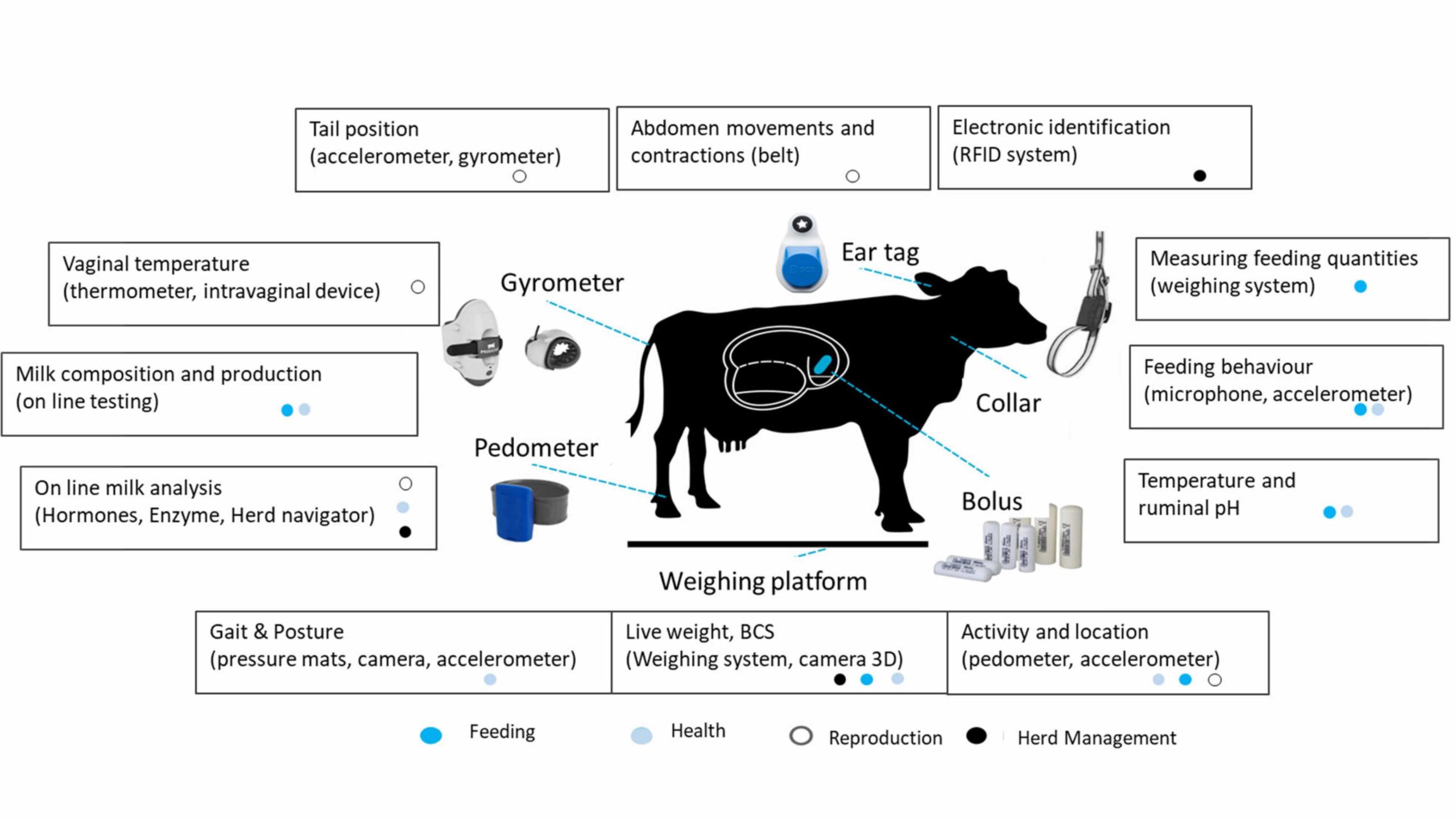

In the livestock sector, sensors attached to animals or installed in barns can monitor physiological and environmental parameters in real time. Combined with AI-based pattern recognition, these tools can detect health problems such as lameness from image data, or signs of respiratory disease such as coughing through sound analysis. This not only improves animal welfare but also enables early intervention, reducing the need for antibiotics and helping to prevent larger disease outbreaks.

Yet, despite their promise, AI tools can raise serious concerns for farmers—particularly when the reasoning behind predictions is unclear. Fears of error, surveillance, or loss of control can undermine trust. If tools are seen as opaque or externally imposed, they may simply go unused. Making AI intuitive and transparent and aligning it with farmers’ realities is essential to ensure it supports, rather than complicates, decision-making in livestock systems.

Why explainability matters

Unlike the rule-based systems of conventional computer programming, in which the decision-making logic is predefined and transparent, many AI models operate as “black boxes”—making it difficult or impossible for users and often even developers to understand exactly how certain conclusions are reached.

The black box issue presents a particular trust challenge for AI in agriculture. If farmers use an AI app for guidance on decisions that could affect their livelihoods but do not understand the rationale behind its recommendations, they may disregard those recommendations or reject the system entirely.

For instance, an AI-driven irrigation scheduling tool could suggest reducing irrigation applications based on weather forecasts and data from soil moisture sensors, while a livestock monitoring system could recommend adjusting feed composition based on pasture quality or weight gain objectives. If these system-generated recommendations are not in line with a farmer’s own judgment and no explanation is provided, farmers might well favor their experience and intuition over AI-generated recommendations.

This is why explainability, which means revealing an AI model’s decision-making process to users in a transparent and comprehensible way, is crucial for AI in agriculture. In practice, an explainable system communicates which underlying data (e.g., weather conditions or animal health parameters) influenced a decision, and to what extent.
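To make “which data influenced a decision, and to what extent” more concrete, the sketch below explains a recommendation from the irrigation example by breaking it into per-input contributions. It assumes a deliberately simple linear model; the input names, weights, and reference values are hypothetical placeholders. For a linear model, each contribution is the weight times the input’s deviation from a reference condition, and the contributions add up exactly to the difference from the reference recommendation. Many real AI models are non-linear, and post-hoc attribution methods such as SHAP or LIME are used to approximate this kind of breakdown for them.

```python
"""Sketch: explaining a linear irrigation recommendation by per-input contributions.

Input names, weights, and reference values are hypothetical placeholders.
"""

# Hypothetical weights: change in recommended irrigation (mm) per unit of each input.
WEIGHTS: dict[str, float] = {
    "forecast_rain_mm": -0.9,   # more forecast rain -> less irrigation
    "soil_moisture_pct": -0.5,  # wetter soil -> less irrigation
    "air_temp_c": 0.4,          # warmer weather -> more irrigation
}
# Hypothetical reference conditions and the recommendation the model gives for them.
BASELINE: dict[str, float] = {"forecast_rain_mm": 0.0, "soil_moisture_pct": 25.0, "air_temp_c": 20.0}
BASELINE_IRRIGATION_MM = 10.0


def contributions(inputs: dict[str, float]) -> dict[str, float]:
    """Contribution of each input = weight * (value - reference value)."""
    return {name: w * (inputs[name] - BASELINE[name]) for name, w in WEIGHTS.items()}


def recommend(inputs: dict[str, float]) -> float:
    """Linear model: reference recommendation plus the sum of all contributions."""
    return BASELINE_IRRIGATION_MM + sum(contributions(inputs).values())


def explain(inputs: dict[str, float]) -> list[str]:
    """Plain-language explanation, most influential input first."""
    ranked = sorted(contributions(inputs).items(), key=lambda kv: abs(kv[1]), reverse=True)
    lines = [
        f"Recommended irrigation: {recommend(inputs):.1f} mm "
        f"(reference: {BASELINE_IRRIGATION_MM:.1f} mm)"
    ]
    for name, c in ranked:
        if c > 0:
            effect = f"raised it by {c:.1f} mm"
        elif c < 0:
            effect = f"lowered it by {-c:.1f} mm"
        else:
            effect = "had no effect"
        lines.append(f"- {name} = {inputs[name]:g} {effect}")
    return lines


if __name__ == "__main__":
    today = {"forecast_rain_mm": 8.0, "soil_moisture_pct": 30.0, "air_temp_c": 22.0}
    print("\n".join(explain(today)))
```

For the example conditions, forecast rain is listed first because it lowers the recommendation the most. Ranking by absolute contribution is one design choice; a tool aimed at farmers might show only the one or two most influential factors.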

Offering understandable explanations is not only key to building trust but also an important aspect of ethical and transparent AI research. It ensures that users and study participants can make informed decisions and fully understand what they are consenting to when they interact with or rely on AI systems.

Typically, explainability is part of the internal AI development process, helping engineers interpret and improve model behavior. But end users must also be considered: farmers are likely less interested in the inner workings of an AI model than in a clear interpretation of its results and the factors that contributed to them.

Challenges in implementing explainability

What makes a good explanation of an AI model’s output depends on several factors. A good starting point is to ask who the explanation is intended for.

Beyond a general focus on farmers, AI explainability in agriculture can benefit from a better understanding of specific user groups, given the vast diversity of agricultural systems: Farms differ greatly in size, specialization, technology use, expertise, and the digital literacy of their staff. Tailoring explanations to these differences is particularly important for supporting smallholder farmers in LMICs.

Concerns about data protection can also be a major obstacle to adoption. Farmers may understandably worry that AI tools collect data from their farms that tool developers could then process or sell. They may also fear that government agencies could request access to this data and use it to introduce or increase taxes. In the case of disease outbreaks, authorities might use farm data to justify quarantines or mandate the slaughter of livestock, with potentially devastating consequences for local livelihoods. Another key part of AI explainability should therefore be communicating where, how, and by whom farm data is processed.

A case study on explainability needs

In research conducted in Germany, we examined how dairy farmers and software providers perceive the explainability of AI-driven herd management systems. We focused on mastitis, a common infection of the udder and a major concern for the dairy sector worldwide, including in LMICs. Mastitis is one of the leading causes of economic loss in the dairy sector and a major driver of antibiotic use.

Early detection is possible by monitoring indicators such as body temperature, somatic cell count (the number of somatic cells, primarily white blood cells, in a given volume of milk), and milk pH, allowing treatment before the infection becomes severe. Explanations based on these parameters were tested in two related studies: the first involved 14 farmers, and the second involved the same 14 farmers plus 13 software providers. In both studies, participants rated four explanation formats, each presenting in a different way how these parameters contributed to the health assessment (Figure 1).
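The idea behind early detection can be sketched as a comparison between a cow’s latest readings and her own recent history. This minimal sketch assumes daily readings per parameter and flags any parameter whose latest value lies more than a chosen number of standard deviations (a z-score) from the cow’s earlier values. The parameter names, the cut-off of 2, and the example data are hypothetical; this is not the detection method used in the studies described here.

```python
"""Sketch: flagging readings that deviate from a cow's own recent history.

Parameter names, the 2-standard-deviation cut-off, and the example data
are hypothetical placeholders.
"""
from statistics import mean, stdev


def deviation_flags(history: dict[str, list[float]], z_cutoff: float = 2.0) -> dict[str, float]:
    """Return {parameter: z-score} for each parameter whose latest value deviates
    from the cow's own baseline by more than z_cutoff standard deviations.

    `history` maps each parameter to daily readings, oldest first. The last value
    is today's reading; all earlier values form the cow's personal baseline.
    """
    flags: dict[str, float] = {}
    for name, values in history.items():
        if len(values) < 3:
            continue  # need today's value plus at least two baseline days for stdev
        *baseline, latest = values
        spread = stdev(baseline)
        if spread == 0:
            continue  # a perfectly flat baseline gives no scale to compare against
        z = (latest - mean(baseline)) / spread
        if abs(z) > z_cutoff:  # flag deviations in either direction
            flags[name] = z
    return flags


if __name__ == "__main__":
    cow_history = {
        "body_temp_c": [38.5, 38.6, 38.4, 38.5, 38.6, 39.6],
        "somatic_cells_k_per_ml": [90.0, 110.0, 100.0, 95.0, 105.0, 480.0],
        "milk_ph": [6.70, 6.68, 6.72, 6.69, 6.71, 6.71],
    }
    for name, z in deviation_flags(cow_history).items():
        print(f"{name}: {z:+.1f} standard deviations from this cow's recent baseline")
```

Comparing each cow with her own baseline, rather than with a fixed limit, accounts for normal variation between animals; the trade-off is that a cow needs several days of readings before the comparison is meaningful.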

Figure 1

The first format is text-based, using natural-language descriptions to explain a cow’s state of health based on her health parameters. The second is rule-based, using a checklist of rules in which ticked or unticked boxes show which parameters are normal or abnormal. The third is a herd comparison, in which a cow’s parameters are compared with those of the rest of the herd. The fourth is a time series, in which graphs show how a cow’s parameters change over time.
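Three of these formats can be generated from the same set of readings, as the sketch below illustrates: a rule-based checklist, a text-based summary, and a herd comparison (the time series format is graphical and is left out). The upper limits, field names, and herd data are hypothetical placeholders rather than validated clinical cut-offs, and ticking a rule when it fires, that is, when a parameter is abnormal, is an assumption about how such a checklist reads.

```python
"""Sketch: rendering one cow's readings in three explanation formats.

Limits, field names, and herd data are hypothetical placeholders,
not validated clinical cut-offs.
"""
from statistics import mean, stdev

# Hypothetical upper limits of the "normal" range for each parameter.
UPPER_LIMITS = {"body_temp_c": 39.2, "somatic_cells_k_per_ml": 200.0, "milk_ph": 6.8}
LABELS = {
    "body_temp_c": "body temperature",
    "somatic_cells_k_per_ml": "somatic cell count",
    "milk_ph": "milk pH",
}


def abnormal_params(cow: dict[str, float]) -> list[str]:
    """Parameters whose value exceeds the hypothetical upper limit."""
    return [p for p, limit in UPPER_LIMITS.items() if cow[p] > limit]


def rule_checklist(cow: dict[str, float]) -> list[str]:
    """Rule-based format: one rule per parameter, ticked [x] when it fires."""
    flagged = set(abnormal_params(cow))
    return [
        f"[{'x' if p in flagged else ' '}] {LABELS[p]} above {limit:g}"
        for p, limit in UPPER_LIMITS.items()
    ]


def text_summary(cow_id: str, cow: dict[str, float]) -> str:
    """Text-based format: a plain-language sentence naming abnormal parameters."""
    flagged = [LABELS[p] for p in abnormal_params(cow)]
    if not flagged:
        return f"Cow {cow_id}: all monitored parameters are within normal limits."
    return f"Cow {cow_id} may need attention: elevated {', '.join(flagged)}."


def herd_comparison(cow: dict[str, float], herd: list[dict[str, float]]) -> dict[str, float]:
    """Herd-comparison format: z-score of each parameter relative to the rest of
    the herd (needs at least two other cows whose values are not all identical)."""
    scores = {}
    for p in UPPER_LIMITS:
        values = [other[p] for other in herd]
        scores[p] = (cow[p] - mean(values)) / stdev(values)
    return scores


if __name__ == "__main__":
    cow = {"body_temp_c": 39.5, "somatic_cells_k_per_ml": 450.0, "milk_ph": 6.75}
    rest_of_herd = [
        {"body_temp_c": 38.5, "somatic_cells_k_per_ml": 90.0, "milk_ph": 6.70},
        {"body_temp_c": 38.7, "somatic_cells_k_per_ml": 120.0, "milk_ph": 6.68},
        {"body_temp_c": 38.6, "somatic_cells_k_per_ml": 150.0, "milk_ph": 6.72},
    ]
    print("\n".join(rule_checklist(cow)))
    print(text_summary("17", cow))
    for p, z in herd_comparison(cow, rest_of_herd).items():
        print(f"{LABELS[p]}: {z:+.1f} SD from herd average")
```

For the example cow, the checklist ticks body temperature and somatic cell count, while the herd comparison expresses each value as its distance from the herd average in standard deviations, so even milk pH, which stays under its limit, is shown to sit above the herd norm.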

The results showed that the explanation formats preferred by farmers differed from those favored by software providers. Farmers mainly found the time series format useful and actionable, noting that it gave intuitive insight into how relevant animal parameters change over time. In contrast, many software providers considered rule-based explanations more suitable because they offer a structured, concise overview; they assumed that farmers are under constant time pressure and would not have time to review time series data.

Mismatches like this can have significant implications: If developers rely on inaccurate assumptions about user needs, there is a risk that the resulting tools will align poorly with the requirements of practitioners, ultimately limiting their effectiveness and adoption.

On a positive note, these insights reveal a clear opportunity for improvement: Incorporating user-centered research to better understand farmers’ workflows and needs can support the design and development of explanations that are not only technically sound but also practically useful and relevant for farmers in real-world settings.

Mengisti Berihu Girmay is a PhD student at the University of Kaiserslautern-Landau and works at the Digital Farming chair of the Computer Science department. Her research focuses on human-computer interaction in digital agriculture, in particular the design and development of explainable decision support systems for dairy farmers. She is especially interested in user-centered design approaches that improve the transparency, trust, and acceptance of AI in agricultural contexts. Opinions are the author’s.

Referenced papers:

Girmay, Mengisti Berihu, and Möhrle, Felix (2024). Perspectives on Explanation Formats From Two Stakeholder Groups in Germany: Software Providers and Dairy Farmers.

Girmay, Mengisti Berihu, Möhrle, Felix, and Henningsen, Jens (2024). Exploring explainability formats to aid decision-making in dairy farming systems: A small case study using the example of mastitis infection.